Posts Tagged EA874

Welcome to the Virtual World…

The Enterprise Technology Architecture, Part 2

There are many times when we have certain expectations, and yet reality proves to be something different than expected. Case in point, the image above shows us an expectation of a “virtual world” based on the 1999 movie, The Matrix, in contrast with an example of the recent personal virtual reality technology. What we expected (or hoped for) is not what we got. In the same way, this blog entry is not about virtual reality, but a discussion about system virtualization.

There is no spoon….

In general, virtualization refers to the technology that creates a logical (virtual) system instead of a physical system, specifically in the field of computer hardware. This hardware can be servers, storage devices, or even network resources. This practice started in a primitive form back in the 1960s on mainframe computers, but has gradually increased in scope and capabilities and is a mainstream technology used today. In a simple breakdown, virtualization falls into the following categories:

- Platform/Hardware virtualization – A full virtual machine acts as a real computer with its own operating system.

- Desktop virtualization – A logical desktop which can run on any physical client while processing takes place on a separate host system.

- Application virtualization – An application appears to run on a client machine, but in reality is hosted on a larger system, which typically has greater processing power.

Interestingly enough, this has a direct correlation to the different service types provided in the cloud-computing environments, as I talked about a number of weeks ago in Let’s get Saassy.

- SaaS – Software as a Service: The capability provided to the consumer is to use the provider’s applications running on a cloud infrastructure. The applications are accessible from various client devices through either a thin client interface, such as a web browser (e.g., web-based email), or a program interface. The consumer does not manage or control the underlying cloud infrastructure including network, servers, operating systems, storage, or even individual application capabilities, with the possible exception of limited user-specific application configuration settings.

- PaaS – Platform as a Service: The capability provided to the consumer is to deploy onto the cloud infrastructure consumer-created or acquired applications created using programming languages, libraries, services, and tools supported by the provider. The consumer does not manage or control the underlying cloud infrastructure including network, servers, operating systems, or storage, but has control over the deployed applications and possibly configuration settings for the application-hosting environment.

- IaaS – Infrastructure as a Service: The capability provided to the consumer is to provision processing, storage, networks, and other fundamental computing resources where the consumer is able to deploy and run arbitrary software, which can include operating systems and applications. The consumer does not manage or control the underlying cloud infrastructure but has control over operating systems, storage, and deployed applications; and possibly limited control of select networking components (e.g., host firewalls).

This direct correlation is due to the fact that most cloud computing technology heavily relies on virtualization to function. “Virtualization is a foundational element of cloud computing and helps deliver on the value of cloud computing. While cloud computing is the delivery of shared computing resources, software or data — as a service and on-demand through the Internet” (Angeles, 2014).

So how does virtualization tie back into Enterprise Technology Architecture and Enterprise Architecture in general?

In response to a question regarding the impact of virtualization and cloud computing on Enterprise Architecture, John Zachman (2011), creator of the Zachman Framework, stated:

The whole idea of Enterprise Architecture is to enable the Enterprise to address orders of magnitude increases in complexity and orders of magnitude increases in the rate of change. Therefore, if you have Enterprise Architecture, and if you have made that Enterprise Architecture explicit, and if you have designed it correctly, you should be able to change the Enterprise and/or its formalisms (that is, its systems, manual or automated) with minimum time, minimum disruption and minimum cost.

Adrian Grigoriu, author of An Enterprise Architecture Development Framework, further expounded on the benefits virtualization brings to Enterprise Architecture (2008).

In this fast moving world, the business of an Enterprise, its logic, should not depend on IT technology, that is what it is or its implementation. Business activities should be performed regardless of technology and free from tomorrow’s new IT hype. Why be concerned whether it is mainframe, COBOL, JavaEE or .NET, Smalltalk, 4GL or AS400 RPG! At the same cost/performance level, IT should decide technology realizing that ongoing change is inevitable.

Business should be willing to adopt technology virtualization to be able to interact with IT technology at a service level, where the negotiation between business and IT is performed in a communication language structured in terms of capabilities, relative feature merits, and their cost. IT functional and non-functional capabilities will be delivered under SLAs at an agreed price. IT virtualization adds an interface layer hiding the IT implementation complexity and technology, while bridging the divide between business and IT.

Even back in 2008 and 2011, Enterprise Architecture leaders realized the benefits that could be gained from virtualization. Considering the growth of the technology since then, those benefits have only multiplied. EA ultimately relies on technology itself to be efficient; therefore, technology that allows for rapid changes will always be of primary importance. In addition, virtualization brings reduced complexity, lowered costs, simplified IT management, improved security and flexible IT service delivery to the organization. These benefits help not only EA, but also IT and the organization in general.

References:

Angeles, Susan. (2014). Virtualization vs. Cloud Computing: What’s the Difference? Business News Daily. Retrieved from http://www.businessnewsdaily.com/5791-virtualization-vs-cloud-computing.html

Zachman, John. (2011). Cloud Computing and Enterprise Architecture. Zachman International. Retrieved from https://www.zachman.com/ea-articles-reference/55-cloud-computing-and-enterprise-architecture-by-john-a-zachman

Grigoriu, Adrian. (2008). The Virtualization of the Enterprise. BPTrends. Retrieved from http://www.bptrends.com/the-virtualization-of-the-enterprise/

Let’s Talk About Tech, Baby…

The Enterprise Technology Architecture, Part 1

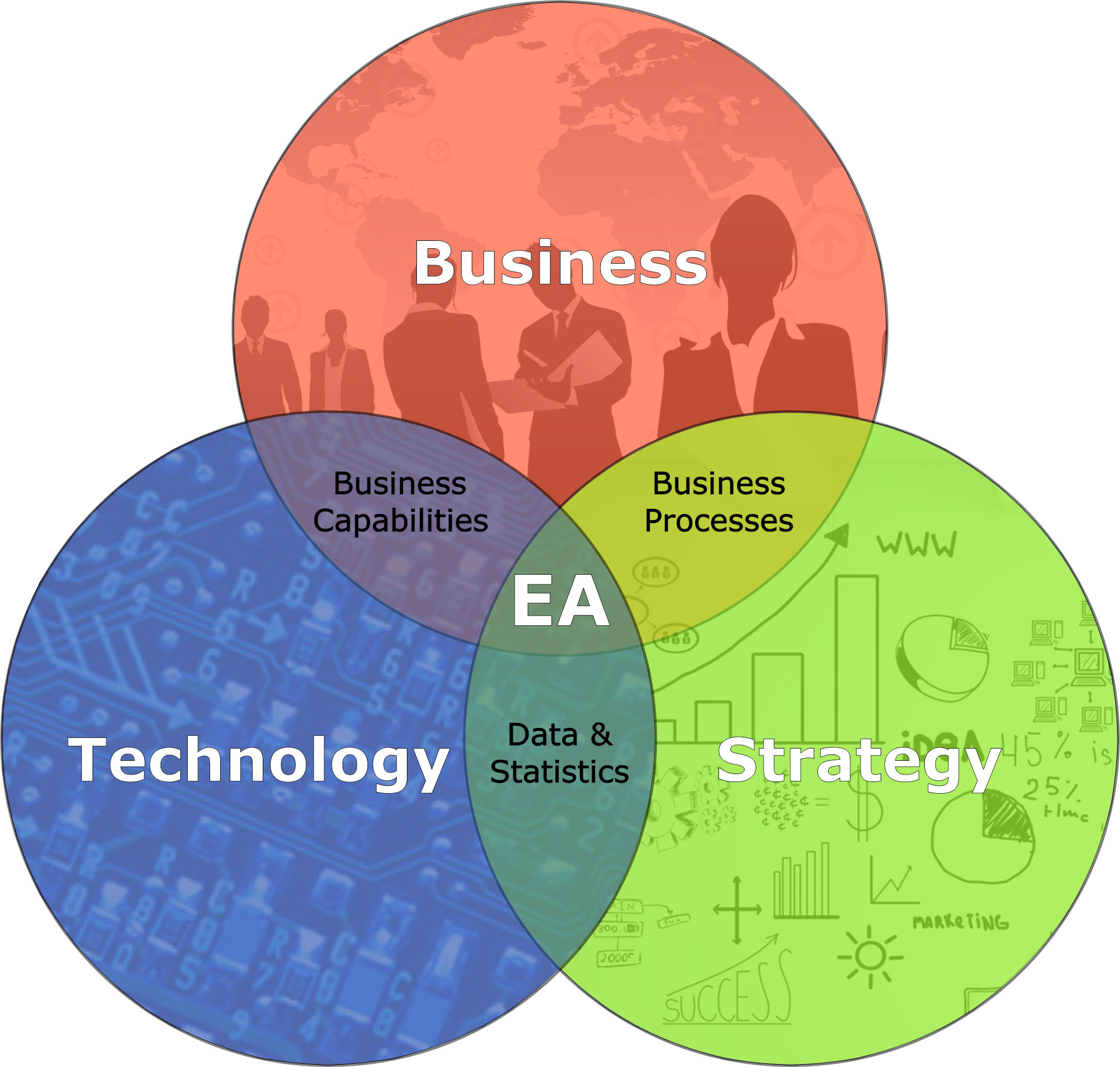

Back in EA871, we were introduced to the concept of Enterprise Architecture and how it holistically links strategy, business and technology together. I’ve frequently described the master’s program to my friends and associates as a combination of IT, an MBA program and project management.

EA has been defined by various technology organizations as:

- Gartner – Enterprise architecture is a discipline for proactively and holistically leading enterprise responses to disruptive forces by identifying and analyzing the execution of change toward desired business vision and outcomes. EA delivers value by presenting business and IT leaders with signature-ready recommendations for adjusting policies and projects to achieve target business outcomes that capitalize on relevant business disruptions. (http://www.gartner.com/it-glossary/enterprise-architecture-ea/)

- MIT Center for Information Systems Research – The organizing logic for business processes and IT infrastructure reflecting the integration and standardization requirements of the firm’s operating model. (http://cisr.mit.edu/research/research-overview/classic-topics/enterprise-architecture/)

- Microsoft – An enterprise architecture is a conceptual tool that assists organizations with the understanding of their own structure and the way they work. It provides a map of the enterprise and is a route planner for business and technology change. (https://msdn.microsoft.com/en-us/library/ms978007.aspx)

For me, the easiest way to describe EA is the intersection of Business, Strategy and Technology:

We’ve talked about various aspects of the strategies of EA, but now let’s talk about the technology. What do we actually mean by technology architecture? Michael Platt, senior engineer at Microsoft, defined it within the framework of Enterprise Architecture:

A technology architecture is the architecture of the hardware and software infrastructure that supports the organization and implements the operational (or non functional) requirements, particularly the application and information architectures of the organization. It describes the structure and inter-relationships of the technologies used, and how those technologies support the operational requirements of the organization.

A good technology architecture can provide security, availability, and reliability, and can support a variety of other operational requirements, but if the application is not designed to take advantage of the characteristics of the technology architecture, it can still perform poorly or be difficult to deploy and operate. Similarly, a well-designed application structure that matches business process requirements precisely—and has been constructed from reusable software components using the latest technology—may map poorly to an actual technology configuration, with servers inappropriately configured to support the application components and network hardware settings unable to support information flow. This shows that there is a relationship between the application architecture and the technology architecture: a good technology architecture is built to support the specific applications vital to the organization; a good application architecture leverages the technology architecture to deliver consistent performance across operational requirements. (Platt, 2002)

In other words, it’s not the hardware or software themselves, but the underlying system framework. In the same way that EA provides standards and guidelines for technology in business, the Enterprise Technology Architecture (ETA) focuses on standards and guidelines for the specific technologies used within the enterprise. According to Gartner, “the enterprise technology architecture (ETA) viewpoint defines reusable standards, guidelines, individual parts and configurations that are technology-related (technical domains). ETA defines how these should be reused to provide infrastructure services via technical domains.”

So ETA is a formalized set of hardware and software that meets various business objectives and goals, and it includes the standards and guidelines that govern the acquisition, preparation and use of that hardware and software. These can be servers, middleware, products, services, procedures and even policies. To be clear, ETA is not the same as System Architecture. System Architecture deals with specific applications and data, along with the business processes which they support. ETA is the technological framework which supports and enables those application and data services.

References:

Platt, M. (2002). Microsoft Architecture Overview. Retrieved from https://msdn.microsoft.com/en-us/library/ms978007.aspx

With Great Power Comes Great Responsibility

The Enterprise Data Architecture, Part 3

Every year, we face challenge after challenge regarding data security. Whether it’s personal identity theft, corporate data hacking, ransomware or industrial espionage, it seems like for every step forward, we take two or three steps backward. This has a large impact not only on private consumers, but on the business world in general.

For consumers, the biggest issue is identity fraud. As identity theft and fraud increase, consumers face more and more challenges in keeping their personal data safe. More and more data is being collected from consumers, from browsing habits to shopping trends. Additionally, critical personal information such as credit card details, phone numbers, addresses, and occasionally even Social Security numbers is being entered on various websites. Let’s not even start a discussion about the credit card skimmers at various retailers….

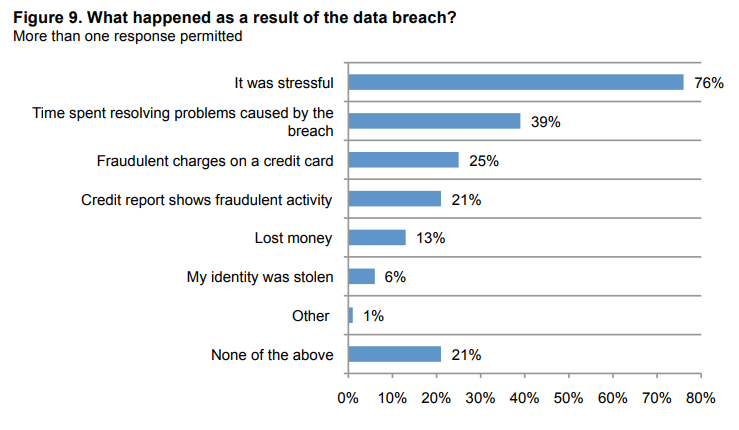

And what happens after your personal data has been stolen…?

Image Source: Ponemon Institute

On the business side, it appears that no one is safe. With the recent hack of Equifax, we have seen tremendous amounts of data stolen that could be devastating to consumers. A reported 143 million people’s data was taken, potentially including Social Security numbers. And the worst part? None of us even gave Equifax permission to have our data to begin with. The list of hacks for 2017, according to ZDNet, is frightening:

- Equifax – 143 million accounts

- Verizon – 14+ million accounts

- Bell Canada – 1.9 million accounts

- Edmodo – 77 million accounts

- HandBrake (video encoder software for Mac) – unknown number of users

- Wonga – 270 thousand accounts

- WannaCry ransomware – 200 thousand computers

- Sabre – thousands of business customers

- Virgin America

- Cellebrite

- Cloudflare

- iCloud

- TSA

- OneLogin

- US Air Force

The list could go on. We live in a time where data is everywhere. This is the reality of Big Data. Regardless of whether it’s your personal data or business data, it is stored somewhere, and it is potentially susceptible to unwanted exposure. We know that hackers are going to try their best to get to that data. So what are the real challenges for the security of Big Data? And what can we do to make it more secure? According to Data Center Knowledge, here is a list of the top 9 Big Data security challenges, and potential ways the security could be improved.

Big Data Security Challenges:

- Most distributed systems’ computations have only a single level of protection, which is not recommended.

- Non-relational databases (NoSQL) are actively evolving, making it difficult for security solutions to keep up with demand.

- Automated data transfer requires additional security measures, which are often not available.

- When a system receives a large amount of information, it should be validated to remain trustworthy and accurate; this practice doesn’t always occur, however.

- Unethical IT specialists practicing information mining can gather personal data without asking users for permission or notifying them.

- Access control encryption and connections security can become dated and inaccessible to the IT specialists who rely on it.

- Some organizations cannot – or do not – institute access controls to divide the level of confidentiality within the company.

- Recommended detailed audits are not routinely performed on Big Data due to the huge amount of information involved.

- Due to the size of Big Data, its origins are not consistently monitored and tracked.
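As an illustration of the access-control challenge in the list above, here is a minimal sketch of dividing data by confidentiality level. The level names, the `can_read` rule and the sample records are all hypothetical and are not taken from the Data Center Knowledge article; a real organization would lean on its own identity and access management tooling.

```python
from enum import IntEnum


class Level(IntEnum):
    """Hypothetical confidentiality levels, lowest to highest."""
    PUBLIC = 1
    INTERNAL = 2
    CONFIDENTIAL = 3


def can_read(user_clearance: Level, data_level: Level) -> bool:
    """A user may read data classified at or below their own clearance."""
    return user_clearance >= data_level


def readable(records: list, user_clearance: Level) -> list:
    """Keep only the records the user is cleared to see."""
    return [r for r in records if can_read(user_clearance, r["level"])]


# Made-up sample data for illustration only.
records = [
    {"name": "press release", "level": Level.PUBLIC},
    {"name": "sales forecast", "level": Level.INTERNAL},
    {"name": "customer SSNs", "level": Level.CONFIDENTIAL},
]

print([r["name"] for r in readable(records, Level.INTERNAL)])
# ['press release', 'sales forecast']
```

The point of the sketch is simply that every record carries a classification and every read is checked against it, which is the step the list says some organizations skip.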

How can Big Data Security be Improved?

- The continued expansion of the antivirus industry.

- Focus on application security, rather than device security.

- Isolate devices and servers containing critical data.

- Introduce real-time security information and event management.

- Provide reactive and proactive protection.

As stated by James Norrie, dean of the Graham School of Business at York College of Pennsylvania, “Big companies made big data happen. Now, ‘big security’ must follow, despite the costs. Regulators and legislators need to remind them through coordinated actions that they can spend it now to protect us all in advance or pay it later in big fines when they don’t. But either way, they are going to pay. Otherwise, the only ones paying will be consumers.”

Taming the Beast

The Enterprise Data Architecture, Part 2

So we know that Big Data is a big problem. What can we do about it and what can we do with it?

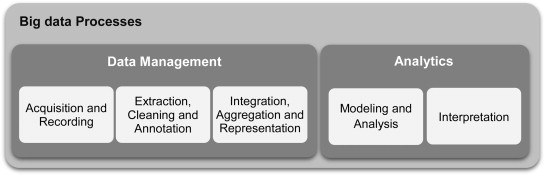

First of all, we need to have an understanding of the content of the big data and how we process this information. Gandomi & Haider (2015) state that “the overall process of extracting insights from big data can be broken down into five stages. These five stages form the two main sub-processes: data management and analytics. Data management involves processes and supporting technologies to acquire and store data and to prepare and retrieve it for analysis. Analytics, on the other hand, refers to techniques used to analyze and acquire intelligence from big data.”

Image source: Gandomi & Haider, 2015

Once we have a better understanding of how to process Big Data, how can we efficiently leverage the information we gain?

The growth of data metrics over time in both quantity and quality is nothing new. Even in the 80s and 90s, articles were published regarding the exponential growth of data and the challenges caused by this growth (Press, 2013). The challenge that has been continually fought in all those years has been to make the available data meaningful. Big data, as we have defined it, provides many opportunities for organizations through “improved operational excellence, a better understanding of customer relations, improved risk management and new ways to drive business innovation. The business value is clear” (Buytendijk & Oestreich, 2016). Yet, investment in big data tools and initiatives has been slow to materialize.

Organizations can incorporate big data initiatives into their existing business process components and artifacts. This will help establish big data as part of their Enterprise Architecture Implementation Plan and incorporate these new business strategies into the future architecture. Educating business leaders and stakeholders as to the benefits of big data initiatives will help overcome any concerns they may have (Albright, Miller & Velumani, 2016). Back in 2013, Gartner conducted a study on Big Data which showed that many leading organizations had already adopted big data initiatives to various degrees, and were starting to use the results both in innovation initiatives and as sources of business strategy. Among the results of that study, they found that “42% of them are developing new products and business models, while 23% are monetizing their information directly” (Walker, 2014).

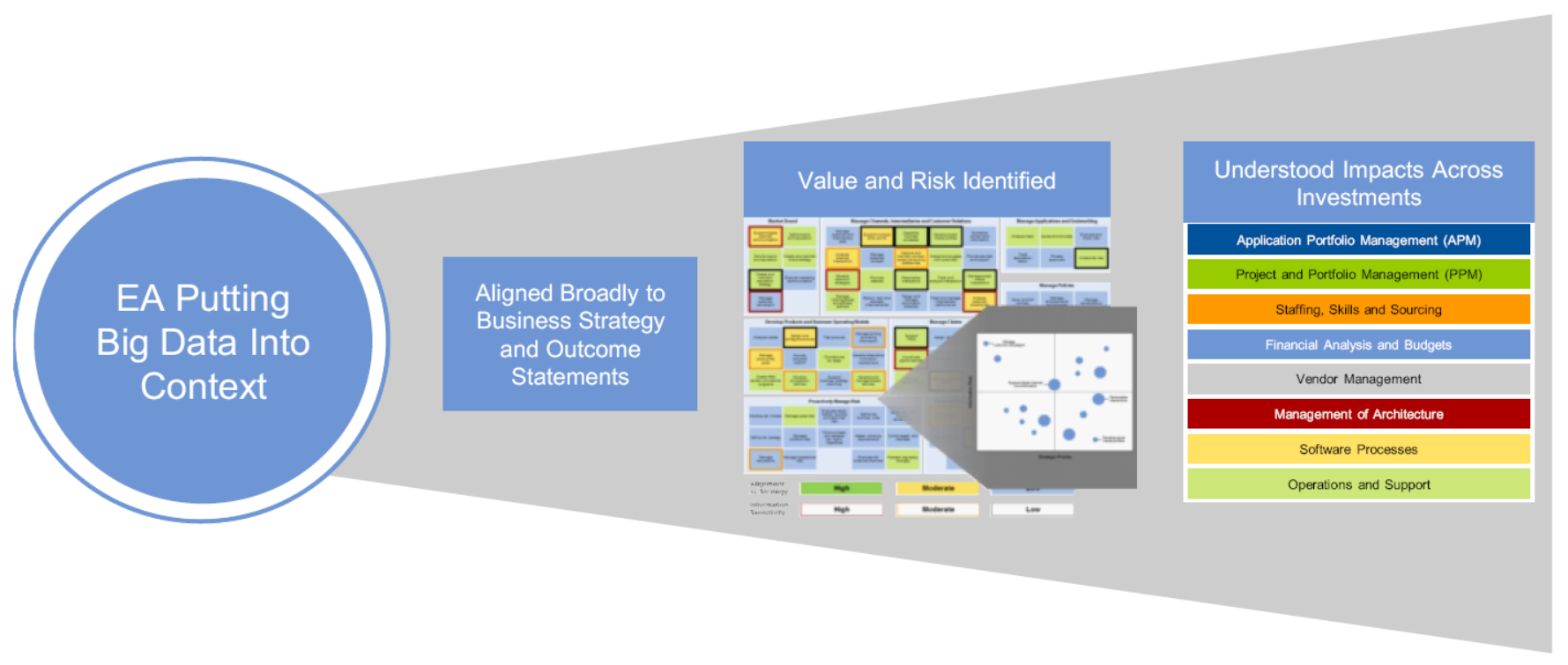

This is where Enterprise Architecture comes into play. EA is uniquely equipped, with its holistic view of strategy, business and technology, to not only create a strategic plan to address the potential big data opportunities, but to create a road map which positions organizations to improve operations, enable growth, and foster innovation. “Organizations that leverage EA in their big data initiatives are able to identify strategic business goals and priorities, reduce risk and maximize business value” (Walker, 2014).

Image source: Walker, 2014

Through the various efforts of EA, new technologies and new approaches to processing, storing and analyzing Big Data, organizations are gradually finding ways to uncover valuable insights. Obviously, leading the charge are companies such as Facebook, LinkedIn and Amazon, but many others have joined the effort as well. “From marketing campaign analysis and social graph analysis to network monitoring, fraud detection and risk modeling, there’s unquestionably a Big Data use case out there with your company’s name on it” (Wikibon, 2012). The infographic below was put together by Wikibon to show how various business leaders have overcome their challenges and have tamed the Big Data beast.

References:

Albright, R., Miller, S. & Velumani, M. (August 2016). Internet of Things & Big Data. EA872, World Campus. Penn State University.

Buytendijk, F. & Oestreich, T. (2016). Organizing for Big Data Through Better Process and Governance (G00274498). Gartner.

Gandomi, A. & Haider, M. (2015). Beyond the Hype: Big data concepts, methods, and analytics, International Journal of Information Management, Volume 35, Issue 2, April 2015, Pages 137-144, ISSN 0268-4012, http://dx.doi.org/10.1016/j.ijinfomgt.2014.10.007.

Press, G. (2013). A Very Short History of Big Data. Retrieved from http://www.forbes.com/sites/gilpress/2013/05/09/a-very-short-history-of-big-data/#1a7787af55da

Walker, M. (2014). Best Practices for Successfully Leveraging Enterprise Architecture in Big Data Initiatives (G00267056). Gartner.

Wikibon. (2012). Taming Big Data. Wikibon. Retrieved September 20, 2017 from https://wikibon.com/taming-big-data-a-big-data-infographic/

Come on… Big Data! Big Data!

The Enterprise Data Architecture, Part 1

With the explosive growth of the Internet and, more recently, the Internet of Things (the rise of the machines…!!!), there has been a corresponding explosive growth in the amount of data available and collected from all those connected devices and software. However, along with the vast volume of data that has been generated, there has been an even greater challenge in dealing with the data. As early as 2001, various IT analysts started reporting on potential issues that would arise due to the mass amounts of data being generated. Most notably, this article by Gartner analyst Doug Laney summarized the various issues attached to Big Data (as it’s commonly called) into what’s known as the 3Vs:

- Data Volume – The depth/breadth of data available

- Data Velocity – The speed at which new data is created

- Data Variety – The increasing variety of formats of the data

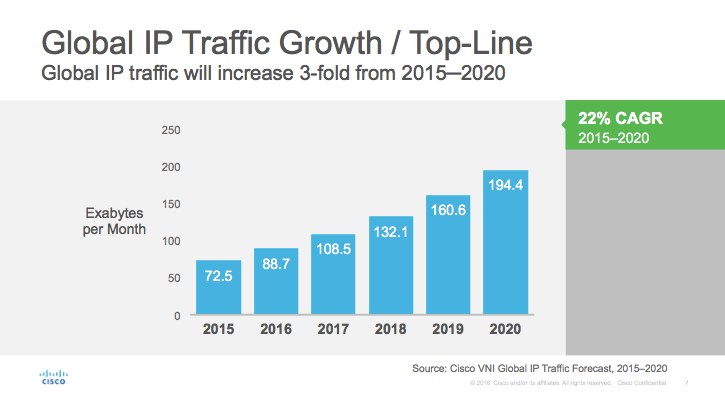

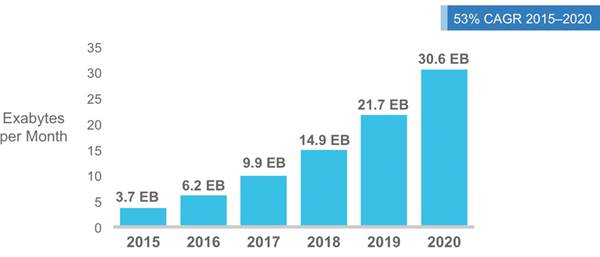

Now, 16 years after that article was written, we still face many of the same challenges. Even though technology has improved to be able to handle larger amounts of data processing and analytics, the amount of source data has continued growing at exponential rates. Each year, Cisco releases a Visual Networking Index which shows the previous years’ data along with predictions for the next few years. In their 2016 report (Cisco, 2016), the prediction was that by 2020, there would be over 8 billion handheld/personal mobile-ready devices as well as over 3 billion M2M devices (other connected devices such as GPS, asset tracking systems, device sensors, medical applications, etc.) in use, consuming a combined 30 million terabytes of mobile data traffic per month. And that is just mobile data.
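To get a feel for the scale of those Cisco numbers, here is a quick back-of-the-envelope sketch. It assumes decimal units (1 TB = 1,000 GB) and treats the "over 8 billion" and "over 3 billion" device counts as exact, so the per-device figure is only a rough average and not something Cisco reports.

```python
# Rough scale check on the figures quoted above (assumption: decimal units).
TB_PER_EB = 1_000_000  # 1 exabyte = 1,000,000 terabytes
GB_PER_TB = 1_000      # 1 terabyte = 1,000 gigabytes

monthly_traffic_tb = 30_000_000           # "30 million terabytes" per month
devices = 8_000_000_000 + 3_000_000_000   # handheld plus M2M devices

exabytes_per_month = monthly_traffic_tb / TB_PER_EB
avg_gb_per_device = monthly_traffic_tb * GB_PER_TB / devices

print(exabytes_per_month)           # 30.0
print(round(avg_gb_per_device, 2))  # 2.73
```

In other words, the forecast works out to about 30 exabytes a month, or a little under 3 GB per connected device per month on average.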

So what are the real challenges being faced due to this exponential growth of data? Here are some facts to consider as posted by Waterford Technologies:

- According to estimates, the volume of business data worldwide, across all companies, doubles every 1.2 years.

- Poor data can cost businesses 20%–35% of their operating revenue.

- Bad data or poor data quality costs US businesses $600 billion annually.

- According to execs, the influx of data is putting a strain on IT infrastructure, with 55 percent of respondents reporting a slowdown of IT systems and 47 percent citing data security problems, according to a global survey from Avanade.

- In that same survey, by a small but noticeable margin, executives at small companies (fewer than 1,000 employees) are nearly 10 percent more likely to view data as a strategic differentiator than their counterparts at large enterprises.

- Three-quarters of decision-makers (76 per cent) surveyed anticipate significant impacts in the domain of storage systems as a result of the “Big Data” phenomenon.

- A quarter of decision-makers surveyed predict that data volumes in their companies will rise by more than 60 per cent by the end of 2014, with the average of all respondents anticipating a growth of no less than 42 per cent.

- 40% projected growth in global data generated per year vs. 5% growth in global IT spending.
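Two of the statistics above are easier to appreciate with a little arithmetic. The sketch below assumes the growth rates hold steady and compound annually, which the quoted sources do not actually claim; the function names are my own, purely for illustration.

```python
import math


def annual_growth_from_doubling(doubling_years: float) -> float:
    """Annual growth rate implied by a fixed doubling time (steady compounding assumed)."""
    return 2 ** (1 / doubling_years) - 1


def years_until_ratio(data_growth: float, spend_growth: float, ratio: float) -> float:
    """Years until data volume per unit of IT spending has grown by `ratio`."""
    return math.log(ratio) / math.log((1 + data_growth) / (1 + spend_growth))


# Doubling every 1.2 years implies roughly 78% growth per year.
print(f"{annual_growth_from_doubling(1.2):.0%}")

# 40% data growth vs. 5% spending growth: the amount of data each
# unit of IT spending has to cover doubles in about 2.4 years.
print(f"{years_until_ratio(0.40, 0.05, 2):.1f}")
```

Under those assumptions, the gap between data growth and IT budgets is not a slow drift. It roughly doubles every two and a half years.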

Datamation put together this list of Big Data challenges, of which I want to highlight a few specifically.

- Dealing with Data Growth – As already mentioned above, the amount of data is growing year over year, so a solution that works today may not work well tomorrow. Investigating and investing in technologies that can grow together with the data is critical.

- Generating Insights in a Timely Manner – Generating and collecting mass amounts of data is a specific challenge that needs to be faced. But more importantly, what do we do with all that data? If the data being generated is not being analyzed and used to benefit the organization, then the effort is being wasted. New tools to analyze data are being created and released annually, and these need to be evaluated to see if there are organizational benefits to be gained.

- Validating Data – Again, if you are generating and collecting mass amounts of data, verifying the integrity of that data is just as important as processing and analyzing it. This is especially important in the quickly expanding field of medical records and health data.

- Securing Big Data – Additionally, the security of the data is a rapidly growing concern. As seen in recent breaches such as the Equifax hack, the sophistication of hacking and phishing is growing right along with the volume of big data itself. If the sources of data are not able to provide adequate security measures, the integrity of the data can be called into question, among many other issues.
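As one small illustration of the "Validating Data" point above, here is a sketch of an integrity check: store a fingerprint of each record when it is collected, and compare against it before the record is analyzed. The record fields and function names are hypothetical, and this only catches changes if the stored fingerprint itself is kept somewhere trustworthy.

```python
import hashlib
import json


def fingerprint(record: dict) -> str:
    """Return a SHA-256 digest of a record, serialized with a stable key order."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def is_intact(record: dict, expected_digest: str) -> bool:
    """True if the record still matches the digest taken at collection time."""
    return fingerprint(record) == expected_digest


# Hypothetical health reading, fingerprinted when it was collected.
reading = {"patient_id": "demo-001", "heart_rate": 72}
digest_at_collection = fingerprint(reading)

# The same reading after someone (or something) altered a value.
tampered = dict(reading, heart_rate=172)

print(is_intact(reading, digest_at_collection))   # True
print(is_intact(tampered, digest_at_collection))  # False
```

A check like this says nothing about whether the original value was accurate, only that it has not changed since it was recorded, so it complements rather than replaces validation at the source.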

Big Data is here to stay, and it’s only going to get bigger. Is your company ready for it?

References:

Cisco (2016). Cisco Visual Networking Index. Retrieved from http://www.cisco.com/c/en/us/solutions/service-provider/visual-networking-index-vni/index.html